Download ~35Mbps, upload ~8Mbps, and the engineer was here for about 20 minutes. Very, very happy.

Category Archives: Internet

Two Days to Infinity

BT parcel arrived today (I think it’s the Home Hub), and I’m still expecting the engineer on Wednesday. Our connection will go dark sometime on the 9th but hopefully be back the same day.

Fingers crossed.

ADSL All Change

In our previous house we had a cable modem, but despite there being a green cable box right outside the wall of the new house, there’s no cable service in our street. I checked with Virgin Cable, but it was no longer commercially viable for them to do the street. So we signed up for ADSL. At the time I wanted a reliable service, and I’d heard good things about Nildram, so I signed up with them.

It wasn’t the cheapest option by a long chalk, but it was reliable: in four years we had no noticeable outages. Nildram was eventually bought by Pipex, but nothing really changed. Then Pipex were bought by Tiscali, and still not much changed. Then Tiscali were bought by Opal, who may have been part of TalkTalk or were later renamed TalkTalk Business, or something like that. Once that happened, the service went downhill.

Overnight disconnects, variable performance, disconnects during the day, etc. However, if you’ve met me you’ll know that I am super-resistant to change. Not because I don’t think change can be good, but because I’m basically lazy. I’ll settle for ‘good enough’ over ‘much better’ if good enough involves no effort. Eventually, though, there’s a tipping point and I’ll initiate change.

I’ve been looking at BT Infinity since it was first announced: cable-like speeds using VDSL. Speeds still vary, because your distance from the cabinet has an impact, but they’re significantly better than most ADSL. My ADSL connection is 6-7Mbps; BT Infinity suggested I might get 34Mbps.

So anyway, about 3 weeks ago we noticed the ’net connection was being a bit odd. Sending tweets wouldn’t work, web pages would half load, and some things wouldn’t connect first time. Throughput was okay once you got a connection (I could still get 500-600KB/s), but it would sometimes require two or three refreshes to load a full web page.

It came to a head when I worked at home one day and my VPN connection to the office was atrocious. I couldn’t send or receive any mail, and got about 2KB/s when trying to send and receive files. Clearly, the VPN suffered even more than everything else from whatever was causing the general connection issues.

Did I call TalkTalk? No, for two reasons. Firstly, someone else at work mentioned exactly the same behaviour on their TalkTalk connection, and they knew of one other person with the same issue as well. Around the same time, I found someone on TalkTalk in a completely different part of the country who had the same problem. The second reason, and you can lambaste me if you like, is that I knew how the conversation would go.

Me: I’m having an issue – description of problem.

Customer Service Rep: Okay, I have a checklist of things you’ve already done, such as rebooting your ADSL router, taking all other devices out of the equation, etc., etc.

Me: But I know other people with the exact same issue around the country, and I’ve tried those things, and it feels to me like packet loss or traffic shaping gone bad, and my line quality values haven’t changed because I track them.

Customer Service Rep: Okay, but can you please work through this checklist of things.

Me: Sigh.

I spend my working days fixing technical issues and advising other people how to fix them, and I know very well how critical it is to follow a logical investigative process and make no assumptions. But when I ring a call centre I don’t want to hear that shit; I want to speak to someone technical so we can talk it through. I know I can’t, I know it’s wrong, but I can’t help it.

It’s like being a retired garage mechanic and taking your car to your local garage, and having to try and explain why you know what the problem is while they ask you what colour the paintwork is.

So no, I didn’t call TalkTalk, but I did decide to move to BT Infinity. Ten minutes into the Infinity signup I remembered I needed my MAC (Migration Authorisation Code). To give them their due, TalkTalk provided it well within the 5 working day estimate. On Tuesday of this week I ordered BT Infinity, and they gave me a delivery and installation date of next Wednesday!

So I’m excited but, of course, because of who I am, I’m also planning! I used to have a fixed IP address with Nildram but that’s going to go, so I’ll need to re-work the security on my VPS and web sites. I’ll need to either run a long Cat5 cable between the VDSL modem and the BT Home Hub, or get a new network switch, or maybe both. Is there going to be room for both boxes near the phone? Will the engineer be helpful and put the Home Hub in a different room? Argh!

So, all change for something many people consider a luxury but which, for many reasons, we consider vital. I’ll let you know how it goes (over 3G if it goes badly).

Untidy Blog

Meh. I’ve transferred (or half-transferred) various blog posts, forum posts and other stuff from various sources to here before shutting down some other websites I host, but as usual I got bored halfway through, so now I have a bunch of old, badly tagged, wrongly formatted stuff hanging around. To make it worse, I’m not sure how far through the process I got, so perhaps only half the posts I wanted have made it across, with no easy way to tell which amongst the 1386 (soon to be 1397 when I hit post) items on the blog.

Oh well, must devote some of my impending holiday to sorting this place out (in lieu of sorting anything in the meatverse out).

Stop Worrying about the Internet

This was true when Douglas Adams wrote it, and it’s true now, but for subtly different reasons.

Everyone should read it.

How to Stop Worrying and Learn to Love the Internet

A selective quote:

We are natural villagers. For most of mankind’s history we have lived in very small communities in which we knew everybody and everybody knew us. But gradually there grew to be far too many of us, and our communities became too large and disparate for us to be able to feel a part of them, and our technologies were unequal to the task of drawing us together. But that is changing.

Interactivity. Many-to-many communications. Pervasive networking. These are cumbersome new terms for elements in our lives so fundamental that, before we lost them, we didn’t even know to have names for them.

Victory!

When we got our Windows 7 PCs earlier in the year, I was really careful to take backups of absolutely everything before we wiped our old PCs. In fact, I ended up with about three backups of everything in several locations. However, due to some issues with hardware, messing about, and frustration, I ended up losing my Picasa albums. Not the ones on the web, but the definitions of albums on local disk.

I wasn’t too worried initially; I had all the photos. Over time, though, I got more and more annoyed that there’s no easy way to re-link a web-based album with Picasa on the PC. No way to say: import this album and its settings, and relink to all the photos. Very annoying.

Every time I start up Picasa I get a little twinge of annoyance. Since I was painting some minis today and taking pictures, I’ve been starting Picasa a lot.

So I finally knuckled down, ransacked Google and my backups, and have restored all but one of the albums! Yay, success. Picasa backs up the .pal files which represent the albums, and with some arcane copying to and fro you can convince Picasa to recreate them, although the behaviour seems inconsistent. No idea why one of them didn’t work, but it’s much easier to rebuild one album manually than 10, and now they’re all back, fully synced and online.

Graphing ngIRCd stats (users / servers / channels) with Munin

I run a little ngIRCd server (well, two) for some friends to use (if you’re a friend and you want to know more, mail me!)

I’m also addicted to numbers, and I’m always interested in monitoring the stuff I run, so I’ve adapted an existing script to graph how many users, servers and channels the ngIRCd daemons have, using Munin.

I’m hosting them on GitHub (along with some other stuff I wrote, or am writing). If you’re interested, you can check them out (hah, get it? I made a version control joke!) here.
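The script itself lives on GitHub rather than here. For anyone who hasn’t written a Munin plugin, the rough shape is sketched below in Python; it’s a hypothetical illustration, not the script I’m running. Munin calls a plugin with the argument `config` to learn the graph and field names, and with no argument to collect current values. The sketch assumes the standard RFC 2812 LUSERS replies (numerics 251 and 254) have already been captured from the server by some other means and are piped in on stdin; the field names, graph title and `chat` category are my own choices.

```python
#!/usr/bin/env python3
"""Hypothetical Munin plugin sketch: graph ngIRCd users, servers and channels.

Assumption: raw LUSERS reply lines from the IRC server are piped in on stdin
(captured by some other tool); this sketch never connects to ngIRCd itself.
"""
import re
import sys

# RPL_LUSERCLIENT (251) and RPL_LUSERCHANNELS (254), using the RFC 2812 wording.
CLIENT_RE = re.compile(
    r"\s251\s\S+\s:There are (\d+) users and \d+ services on (\d+) servers?"
)
CHANNELS_RE = re.compile(r"\s254\s\S+\s(\d+)\s:")

FIELDS = ("users", "servers", "channels")


def parse_lusers(lines):
    """Pull counts out of LUSERS numerics; a field stays None if not seen."""
    stats = dict.fromkeys(FIELDS)
    for line in lines:
        client = CLIENT_RE.search(line)
        if client:
            stats["users"] = int(client.group(1))
            stats["servers"] = int(client.group(2))
        channels = CHANNELS_RE.search(line)
        if channels:
            stats["channels"] = int(channels.group(1))
    return stats


def config_text():
    """Output for 'plugin config': graph metadata plus one label per field."""
    lines = ["graph_title ngIRCd usage", "graph_vlabel count", "graph_category chat"]
    lines += [f"{name}.label {name}" for name in FIELDS]
    return "\n".join(lines)


def values_text(stats):
    """Output for a normal run; 'U' tells Munin the value is unknown."""
    return "\n".join(
        f"{name}.value {'U' if stats[name] is None else stats[name]}"
        for name in FIELDS
    )


if __name__ == "__main__":
    if len(sys.argv) > 1 and sys.argv[1] == "config":
        print(config_text())
    else:
        print(values_text(parse_lusers(sys.stdin)))
```

Fed a 251 line reporting 5 users on 2 servers and a 254 line reporting 3 channels, a normal run prints `users.value 5`, `servers.value 2` and `channels.value 3`, one per line; anything it can’t find is reported as `U` rather than a misleading zero.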

Exim4 (SMTP MTA) + Debian + Masquerading

I love Debian, and Exim4 seems to ‘just work’ for me most of the time, so I tend to use it as my MTA by preference. Debconf handles the basic options for Exim4 pretty well, and usually I don’t need to mess with anything.

However, on one of my VPSs I wanted to do what I used to call masquerading. I use the term to mean having an SMTP server automatically masquerade as a different host on outbound e-mail addresses. So the server may be fred.example.net, but all outgoing mail comes from user@example.net. It’s common if you want to handle the return mail via some other route, and I do. My servers are in the darkstorm.co.uk domain, but I don’t want them handling mail for somehostname.darkstorm.co.uk, and I don’t want to have to configure every user with a different address; I just wanted a simple way to get Exim to masquerade. Additionally, I only want external outbound mail rewritten; mail which is staying on the server should remain untouched. This allows bob to mail fred on the server, and fred to reply without the mail suddenly going off the server, but if bob mails bill@example.org, then his address is rewritten correctly.

I think I spent some time looking at this a couple of years ago, and had the same experience then as I did recently: it’s a bit frustrating tracking down the best place to do it. Firstly, Exim doesn’t have a masquerade option as such, and the manual doesn’t refer to masquerading in that way. What it does have is an extensive rewriting section in the config file, and support for doing that rewriting in various ways.

On top of this, the Debian configuration of Exim can be a little daunting at first, and how you achieve the configuration may depend on whether you’re using a split config or the combined config.

Anyway, enough rambling, you can get Exim to rewrite outgoing mail / masquerade by setting one macro. This works on Debian 6 (Squeeze) with Exim4, but I assume it’ll work with Exim4 on any Debian installation.

Create (or edit)

/etc/exim4/exim4.conf.localmacros

Add the following line,

REMOTE_SMTP_HEADERS_REWRITE = *@hostname.example.net ${1}@example.net

Rebuild the Exim config (might not be essential but I do it every time anyway),

update-exim4.conf

and then recycle Exim (reload might work, but I tend to recycle stuff),

/etc/init.d/exim4 restart

That same macro is used for both the single monolithic config file and the split config file. It tells Exim that, for remote SMTP deliveries only, it should rewrite any header address that matches the pattern on the left with the replacement on the right. The ${1} on the right is replaced by whatever the * on the left matched (if the pattern contains several *s, their matches are available as ${1}, ${2}, and so on).

You can supply multiple rules by separating them with colons, such as this,

REMOTE_SMTP_HEADERS_REWRITE = *@hostname.example.net ${1}@example.net : *@hostname ${1}@example.net : *@localhost ${1}@example.net

There are more flags you can provide to the rewrite rules, and you can place rewrites in other locations, but the above will achieve the basic desire of ignoring locally delivered mail, but rewriting all headers on outbound e-mail which match.
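To make the ${1} substitution and the colon-separated list a bit more concrete, here’s a rough Python simulation of the three-rule macro above. It is not Exim’s matcher: it only understands `*` wildcards (each one captured as ${1}, ${2}, and so on), it simply applies each rule in turn to the current address, and the `remote` argument is a hypothetical stand-in for ‘this message is leaving via remote SMTP’, which is the only case the macro affects.

```python
import re

# The three-rule macro value from above (hostname/example.net are placeholders).
MACRO = (
    "*@hostname.example.net ${1}@example.net : "
    "*@hostname ${1}@example.net : "
    "*@localhost ${1}@example.net"
)


def parse_rules(text):
    """Split a colon-separated rule list into (compiled pattern, replacement) pairs."""
    rules = []
    for chunk in text.split(":"):
        pattern, replacement = chunk.split()
        # Each '*' becomes a capture group; everything else must match literally.
        regex = "([^@]*)".join(re.escape(part) for part in pattern.split("*"))
        rules.append((re.compile(regex), replacement))
    return rules


def apply_rules(address, rules):
    """Rewrite an address, filling ${1}, ${2}, ... from what each '*' matched."""
    for pattern, replacement in rules:
        match = pattern.fullmatch(address)
        if match:
            address = re.sub(
                r"\$\{(\d+)\}", lambda ref: match.group(int(ref.group(1))), replacement
            )
    return address


def header_address(address, remote, rules):
    """Only mail leaving via remote SMTP is rewritten; local deliveries are untouched."""
    return apply_rules(address, rules) if remote else address


rules = parse_rules(MACRO)
print(header_address("bob@hostname.example.net", True, rules))   # bob@example.net
print(header_address("bob@hostname.example.net", False, rules))  # bob@hostname.example.net
print(header_address("bill@example.org", True, rules))           # bill@example.org
```

The last line is the bob/bill case from earlier: an address that matches none of the patterns passes through unchanged, while bob’s own address is only rewritten when the mail is actually leaving the server.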

Full details of Exim’s rewriting rules are here. Details about using Exim4 with Debian can be found in Debian’s Exim4 readme (which you can read online here).

I’m sure there are other ways of achieving this. There’s certainly an option in the debconf config (dc_hide_mailname) which seems hopeful, but it didn’t seem to do anything for me (maybe it only works when you’re using a smart relay?). Either way, this macro does what I wanted, and hey, this is UNIX, there’s always more than one way to skin a cat.

Edit: just had a look at the various Debian package files, and it looks like dc_hide_mailname only works if you’re using a smart relay option for Exim. If you’re using the full internet host option from Debconf, it never asks you if you want to hide the mailname, and ignores that option when you rebuild the config files.

SSH tunnelling made easy (part four)

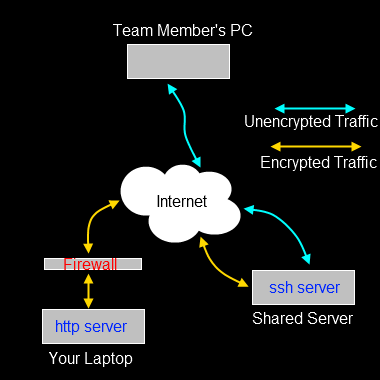

The first three parts of this series (one, two, three) covered using SSH to tunnel across various combinations of firewalls and other hops in a forward direction. By that, I mean you are using computer A and you’re trying to get to something on computer B or computer C. There is another type of problem that SSH tunnels can solve. What if you’re running a service on computer A but you can’t get to it because your network doesn’t allow any incoming connections? Maybe it’s a home server behind a NAT router and you can’t / don’t want to poke holes in the firewall? Maybe you’re in a cafe and no one can connect to your machine because the free wireless doesn’t allow it, but you want to share something on your local web server?

In those situations, you need reverse tunnels (or remote tunnels). There’s nothing magical about them: they just carry traffic in the other direction while still being initiated from the same starting point.

Example 4 – reverse tunnel web server

In this example, we’ll use a reverse web tunnel to enable access to a host for which incoming connections are entirely blocked. You’re sitting with your laptop in a cafe, doing some work, and you want to show some team mates the new web site layout. Rather than having to check the code out to a public web server, you can just allow access to the web server you run on your local machine.

The assumption here is that you can SSH into the shared SSH server, and that your team mate can reach that server with their web browser.

Your team mate can’t browse to the web server on your laptop, because the cafe firewall quite sensibly gets in the way. What we need is a way to allow traffic from the SSH server into your laptop.

From your laptop, you create a reverse / remote tunnel (note -R, rather than -L),

ssh -R 203.0.113.34:9090:127.0.0.1:80 fred@203.0.113.34

I’ve used IP addresses in the tunnel so you can see what is going on. With regular (-L) tunnels, the first IP address and port are on the local machine. With reverse tunnels, they are the interface and port on the remote server that listen for traffic, and the second IP address and port are the ones on the local machine to which that traffic is routed. So our reverse tunnel above connects to the SSH server (203.0.113.34) and starts listening on that network interface (203.0.113.34), port 9090. Any traffic it gets on that port is routed over the tunnel to 127.0.0.1 port 80 (i.e. your local machine, port 80).
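If the order of the four fields in the -R argument is hard to keep straight, this small Python sketch splits a spec the way the paragraph above describes. It’s only an illustration: it handles plain IPv4-style `[bind_address:]port:host:hostport` strings (no IPv6 brackets or Unix sockets), and the `RemoteForward` type and its field names are my own.

```python
from typing import NamedTuple, Optional


class RemoteForward(NamedTuple):
    listen_host: Optional[str]  # interface on the SSH server; None if not given
    listen_port: int            # port the SSH server listens on
    target_host: str            # where traffic is sent, as seen from your laptop
    target_port: int            # port on that target


def parse_remote_forward(spec: str) -> RemoteForward:
    """Split an ssh -R argument of the form [bind_address:]port:host:hostport."""
    parts = spec.split(":")
    if len(parts) == 3:
        # No bind address: OpenSSH listens on the server's loopback only,
        # unless the server's GatewayPorts setting says otherwise.
        parts = [None] + parts
    if len(parts) != 4:
        raise ValueError(f"unsupported forward spec: {spec!r}")
    listen_host, listen_port, target_host, target_port = parts
    return RemoteForward(listen_host, int(listen_port), target_host, int(target_port))


fwd = parse_remote_forward("203.0.113.34:9090:127.0.0.1:80")
print(f"{fwd.listen_host}:{fwd.listen_port} on the server -> "
      f"{fwd.target_host}:{fwd.target_port} on the laptop")
```

For the tunnel above it prints `203.0.113.34:9090 on the server -> 127.0.0.1:80 on the laptop`, which is exactly the route your team mate’s browser traffic takes.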

Your team mate can now point their browser at 203.0.113.34:9090 and will actually see the web server on your laptop. Because you created an outgoing connection through the firewall with the tunnel, the firewall is none the wiser: it simply sees regular SSH traffic flowing to and from the SSH server.

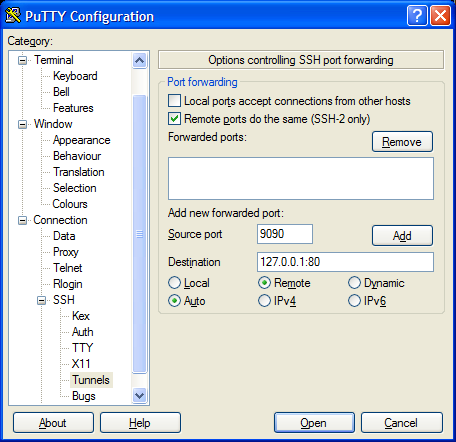

In PuTTY the setup would look like this,

The Remote ports option needs to be ticked so that the tunnel will listen on external interfaces on the SSH server.

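NB: In order to get reverse (or remote) tunnels working in this way, you need to ensure the SSH server to which you connect allows it. For OpenSSH, that means enabling the GatewayPorts option in the sshd_config file (GatewayPorts yes, or clientspecified if you want the client to choose the listening address). By default, sshd binds remote forwards to the loopback interface only, so your team mate wouldn’t be able to reach them.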

Virtual Machines – taking the pain out of major upgrades

If your computers are physical machines, where each piece of hardware runs a single OS image, then upgrading that OS image puts your services at risk or makes them unavailable for a period of time.

Sure, you have a development and test environment where you can prove the process, but those machines cost money. So processes develop to ensure either that you have a good backout plan or that you only make changes you know will work.

Virtual Machines have changed the game. I have a couple of Linux (Debian) based VMs. They’re piddly little things that run some websites and a news server. They’re basically vanity VMs; I don’t need them. I could get away with shared hosting, but I like having servers I can play with. It keeps my UNIX skills sharp, and lets me learn new ones.

Debian have just released v6 (Squeeze). Debian’s release schedule is slow but very controlled, which hopefully leads to very stable servers. Rather than constantly updating packages, as you might find with other Linux distributions, Debian restricts updates within a release largely to security patches, and then every few years a new major release is made.

This is excellent, but it does introduce a lot of change in one go when you move from one release of Debian to the next. A lot of new features arrive, configuration files change in significant ways and you have to be careful with the upgrade process as a result.

For matrix (the VM that runs my news server), I took the plunge and ran through the upgrade. It mostly worked fine, although services were out for a couple of hours. I had to recompile some additional stuff not included in Debian, and had to learn a little bit about new options and features in some applications. Because the service is down, you’re doing that kind of thing in a reasonably pressured environment. But in the end, the upgrade was a success.

However, the neat freak inside me knows that spread over that server are config files missing default options, old copies of config files lying around that I need to clean up, and legacy stuff that is supported but deprecated, just waiting to bite me in obscure ways later on.

So I decided to take a different approach with yoda (the server that runs most of the websites). I don’t need any additional hardware to run another server, it’s a VM. Gandi can provision one in about 8 minutes. So, I ordered a new clean Debian 6 VM. I set about installing the packages I needed, and making the config changes to support my web sites.

All told, that took about 4 hours. That’s still less time than the effort required to do an upgrade.

I structure the data on the web server in such a way that it’s easy to migrate (after lessons learned moving from Gradwell to 1and1 and then finally to Gandi), so I can migrate an entire website from one server to another in about 5 minutes, plus the time it takes for the DNS changes to propagate.

Now I have a nice clean server, running a fresh copy of Debian Squeeze without any of the confusion or trouble that can come from upgrades. I can migrate services across at my leisure, in a controlled way, and learn anything I need to about new features as I go (for example, I’ve switched away from Apache’s worker MPM and back to the prefork MPM).

Once the migration is done, I can shut down the old VM. I only pay Gandi for the hours or days that I have the extra VM running. There’s no risk to the services: if they fail on the new server, I can just revert to providing them from the old one.

Virtual Machines mean I don’t have to do upgrades in place, but equally I don’t have to have a lot of hardware assets knocking around just to support infrequent upgrades like this.

There are issues, of course. One of the reasons I didn’t do this with matrix is that it has a lot of data on it, and no trivial way to migrate it. Additionally, other servers that talk to matrix are likely to have its IP address cached or configured somewhere other than DNS, which makes it less easy to move to a new image. But overall, I think the flexibility of VMs certainly brings a new dimension to major upgrades.